
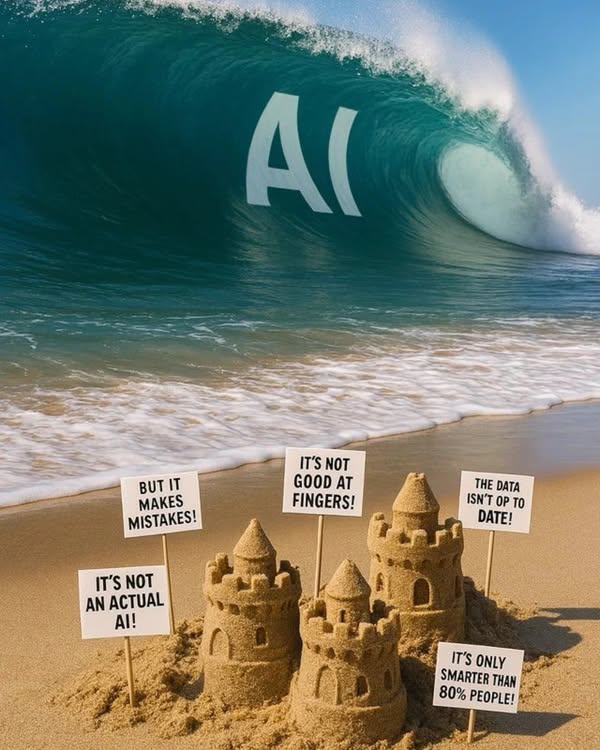

AI Denial

With all the recent breakthroughs in AI, one would expect most people to have woken up to the fact that some really big changes are coming. Sure, some people are ignorant, but surely most people with a scientific or technical education would get it? And people with some interest in politics would be worried about the implications for our society, right?

What puzzles me is that, almost three years after the release of ChatGPT, people still do not get it. Otherwise intelligent, educated people with some political awareness do not get it. In this text I try to explore why that is.

I was reading about the possibility of AI reaching human-level intelligence some 25 or 30 years ago. Back then I read Kurzweil and Yudkowsky. While I was not sure things would turn out as they predicted, as an IT person I understood Moore's Law, and the prediction at the time was that around 2025 computers would have more computing power than the human brain. That would not automatically mean AI would be as intelligent as we are, but from then on it would only be a matter of the right software.

I learned about AI and the backpropagation algorithm some 40 years ago, and even then it seemed plausible that this would be a building block for human-like AI once computing power scaled up. Even with some difficulties on the software side, given the exponential growth that Moore's Law is all about, it would only be a matter of time until we got human-level AI. And even if the future is never 100% certain, the possibility that we would get there, with all the implications it would bring for the world, should have led us to at least think through the possibilities and prepare for them. But not much happened.

So when ChatGPT came out in November 2022, I was not really surprised. After all, it was following the predicted curve. I was sure that this evidence that we had indeed made huge leaps towards AGI ("artificial general intelligence", i.e. human-level AI) would serve as a wake-up call.

Sure, some non-technical people overestimated the capabilities of the initial ChatGPT and attributed more "intelligence" to it than it had. But at least for technical people it should have been clear that this was only the beginning. ChatGPT, and soon other systems, only gave answers out of an "intuition" developed by training on large datasets; beyond that intuition, no "thinking" was involved. But the clear logical next step was to combine this "intuition" with a "loop" that feeds the output back into the system to "check" its validity. This would result in a system that can do logical reasoning, combined with the huge "intuition database" that is encoded in its weights and that represents much of human knowledge. This is what we got with OpenAI's o1 and o3 and other "reasoning" models.

But even with all of this, even now that we are obviously on the brink of AGI, a lot of people still assume that life will go on as they know it. ChatGPT and generating images and videos are a nice curiosity to them, but other than that they think their jobs are safe. About a year ago, a person who was doing actual AI research told us that we only need to worry about "narrow AI" (AI that is only capable of specific tasks) and that human-level AGI is impossible, or maybe 300 years in the future!

So what is behind the AI denial?

Underestimating exponential growth

This is what we saw during the coronavirus pandemic. People were not very worried because there were only a few cases. The fact that, with exponential growth, even a small number of cases could soon turn into a complete disaster was not apparent to them. One would hope that technically and scientifically trained people would not fall for this, but "normal" people tend to extrapolate new developments linearly. If a technology has this many capabilities this year and had a bit fewer a year ago, their "intuition" tells them that significant new progress is decades away.
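The gap between these two intuitions is easy to see with a bit of arithmetic. The following is a minimal Python sketch under made-up assumptions: a hypothetical "capability score" that was 1 last year, is 2 this year, and keeps doubling every year, compared with a straight-line guess drawn through those same two points. The function names and the target of 1000 are illustrative only, not measurements of any real AI system. The last line applies the same formula to a Moore's-Law-style doubling every two years.

```python
# Illustrative only: compare exponential growth with a naive linear
# extrapolation from the same two data points (hypothetical numbers).

def exponential(start: float, doubling_years: float, years: float) -> float:
    """Value after `years` if it starts at `start` and doubles every `doubling_years`."""
    return start * 2 ** (years / doubling_years)

def linear_forecast(prev: float, current: float, years_ahead: float) -> float:
    """Straight-line extrapolation from two consecutive yearly values."""
    return current + (current - prev) * years_ahead

def years_until(target: float, forecast) -> int:
    """Smallest whole number of years ahead at which forecast(years) >= target."""
    years = 0
    while forecast(years) < target:
        years += 1
    return years

if __name__ == "__main__":
    # Assumption: score was 1.0 last year and is 2.0 this year.
    target = 1000.0
    exp_years = years_until(target, lambda y: exponential(2.0, 1.0, y))
    lin_years = years_until(target, lambda y: linear_forecast(1.0, 2.0, y))
    print(f"exponential: {exp_years} years, linear intuition: {lin_years} years")
    # Moore's-Law-style growth: doubling every 2 years, over 30 years.
    print(f"30 years of doubling every 2 years: x{exponential(1.0, 2.0, 30):,.0f}")
```

Run as a script, it reports 9 years for the doubling curve to reach 1000 versus 998 years for the straight-line guess, and a factor of 32,768 for 30 years of doubling every two years. Both forecasts agree perfectly on the data we already have; they only diverge about the future.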

Attributing supernatural and divine powers to the human brain

For thousands of years, people have been indoctrinated about the human "soul" and how unique it is. I guess this also influences some atheists today who still believe in some sort of supernatural power of the human brain. Even super smart people like Roger Penrose claim that human consciousness is only possible because of some weird quantum processes going on in the microtubules of our brain cells. Well, quantum effects are everywhere. Randomness is also part of LLMs. If randomness is necessary, even a computer could easily obtain it from quantum processes: all you need to do is, e.g., measure the electrical noise of a Zener diode, which is directly generated by quantum tunneling. Now of course this also easily drifts off into philosophical obscurity. What even is "consciousness"? Does it, e.g., need the "sensation" of being confined in a "body"? For sure, every sufficiently general intelligence will have a notion of "itself": you cannot have a good model of the world with a glaring blind spot in the middle that is your own existence.

In general, I think a lot of people have not spent much time exploring how their own mind really works: how it, too, is built on neural networks that produce "intuition", and how easily it can be tricked into a lot of things. Optical illusions, logical fallacies, ...

... but it is not human

Now this is a typical argument that people bring up when it comes to their jobs: they need to communicate with other people, they need "feelings" and "emotions" to do their job, and a computer can never do that. I would say they have a bit of a point here: an AI is not a human intelligence, at least not until we have a 1:1 simulation of the biological hardware our brain uses. So AI is, at least for now, always some "alien" intelligence, built differently and trained differently in so many ways.

“If a lion could speak, we could not understand him.” (Ludwig Wittgenstein)

So they are right: an AGI is not human. But this does not mean it will not soon beat us in all fields: math, science, engineering, finance, law, etc. People who are not afraid of losing their jobs are usually the ones who also do manual labor. But then again, humanoid robots are just around the corner, and with an AGI in the background these systems will be able to navigate our world just fine.

As for emotions: while an AI will not understand on a deep level what it means to be human, because it is not human, it will still be able to do most jobs that involve handling human emotions. Detecting micro-expressions on a human face or subtle changes in our voice to infer someone's emotional state? An AI will be able to do this better than we can, and it will also be able to simulate what these emotions sound and look like. So if your job is, e.g., being a salesperson who is really good at tricking other people into buying stuff they do not need: you will soon be replaced by an AI.

But new technology has always created new jobs

More than ten years ago, CGP Grey put out his famous YouTube video "Humans Need Not Apply". We can always find historical parallels for everything, until we cannot: the "Black Swan". The G in AGI stands for "general", which means it can do everything that a human can "generally" do. It is not specialized. So in the future the question is not what an AI can and cannot do, but what we want to do as humans and what we do not. If we are still in charge, that is.

But it is all hype by the tech billionaires

Sure, those people want to sell you new gadgets, so they have a financial interest in claiming that this is "the future" and perhaps in overstating the capabilities of their systems. But in the end, all the money poured into AI-related tech will only increase the speed at which it is developed. So it is a bit like a self-fulfilling prophecy. People sometimes compare it to the dot-com bubble that burst in 2001. But as you can see, the bursting bubble did not stop the Internet from becoming a dominant technology.

But we do not like where it is going.

Right. I do not like it either. In fact, with capitalist and imperialist interests behind it, we have to be extremely worried. Not only that: all the issues we have in our current society, such as racism, sexism, and misogyny, can easily be amplified by this new technology. I fully agree: this technology can cause extreme harm and really should not be in the hands of greedy capitalist profit-makers. But then again, this is exactly why we should not underestimate or downplay the significance of these developments. Only if enough people are aware of the fundamental changes this new technology will bring, and of how fast they will happen, is there a chance to change the course of history.

The idea that none of this will happen if we just close our eyes and pretend not to see it is really absurd. Yet this is, especially on the political left, the main driving factor of AI denial: they do not like the AI developed by the capitalist tech giants, and so they pretend it will not happen.

The Overton Window - No one wants to sound like a lunatic

Talking about the fundamental changes that AGI and ASI ("artificial superintelligence") will bring sounds a lot like sci-fi. So even people who are aware that some of these developments are fairly likely are still worried about sounding like lunatics to those who have not thought about these things. The Overton window has not shifted enough. So it seems some people are starting to grasp what is about to come but are still not vocal about it.

It seems that, especially in the scientific community, a lot of people are reluctant to speak out. My hope was that Google hiring Ray Kurzweil as a director of engineering in 2012 would help. And then, with Geoffrey Hinton speaking out and becoming a Nobel laureate, I hoped this would break the ice. But the reluctance to speak out still seems to be there.

People are reluctant to admit mistakes

In the course of this debate, a number of people have committed themselves publicly to some of the AI-denial positions mentioned above. And admitting a mistake is often not easy. So people try to rationalize their positions and find arguments for why they were still "right". This lack of intellectual honesty is also part of the widespread AI denial that we see.

So one could argue that it does not matter much: within the next one or two years, everyone will see what AGI is capable of. The main issue is that we should already be advocating for political control of AI, for a universal basic income (UBI), and for an end to capitalism. There is not much time left, and we certainly must not waste any more of it before pushing our political demands.

Franz Schaefer (Mond). May 2025.